Introduction

What is PSyKI?

PSyKI (Platform for Symbolic Knowledge Injection) is a Python library for symbolic knowledge injection (SKI). SKI is a particular subclass of neuro-symbolic (NeSy) integration techniques. PSyKI offers SKI algorithms (a.k.a. injectors) along with quality-of-service (QoS) metrics.

For more details, have a look at the original paper, whose BibTeX entry is reported below.

If you use one or more of the features provided by PSyKI, please consider citing this work.

BibTeX:


    @incollection{psyki-extraamas2022,
        author = {Magnini, Matteo and Ciatto, Giovanni and Omicini, Andrea},
        booktitle = {Explainable and Transparent AI and Multi-Agent Systems},
        chapter = 6,
        dblp = {conf/atal/MagniniCO22},
        doi = {10.1007/978-3-031-15565-9_6},
        editor = {Calvaresi, Davide and Najjar, Amro and Winikoff, Michael and Främling, Kary},
        eisbn = {978-3-031-15565-9},
        eissn = {1611-3349},
        iris = {11585/899511},
        isbn = {978-3-031-15564-2},
        issn = {0302-9743},
        keywords = {Symbolic Knowledge Injection, Explainable AI, XAI, Neural Networks, PSyKI},
        note = {4th International Workshop, EXTRAAMAS 2022, Virtual Event, May 9--10, 2022, Revised Selected Papers},
        pages = {90--108},
        publisher = {Springer},
        scholar = {7587528289517313138},
        scopus = {2-s2.0-85138317005},
        series = {Lecture Notes in Computer Science},
        title = {On the Design of {PSyKI}: a Platform for Symbolic Knowledge Injection into Sub-Symbolic Predictors},
        url = {https://link.springer.com/chapter/10.1007/978-3-031-15565-9_6},
        urlpdf = {https://link.springer.com/content/pdf/10.1007/978-3-031-15565-9_6.pdf},
        volume = 13283,
        wos = {000870042100006},
        year = 2022
    }

Overview

Premise: the knowledge that we consider is symbolic, and it is represented with formal logic. In particular, we use the Prolog formalism to express logic rules.

Note: some aspects of the Prolog language are not fully supported. Generally, every SKI method specifies which kinds of knowledge it supports.

SKI workflow

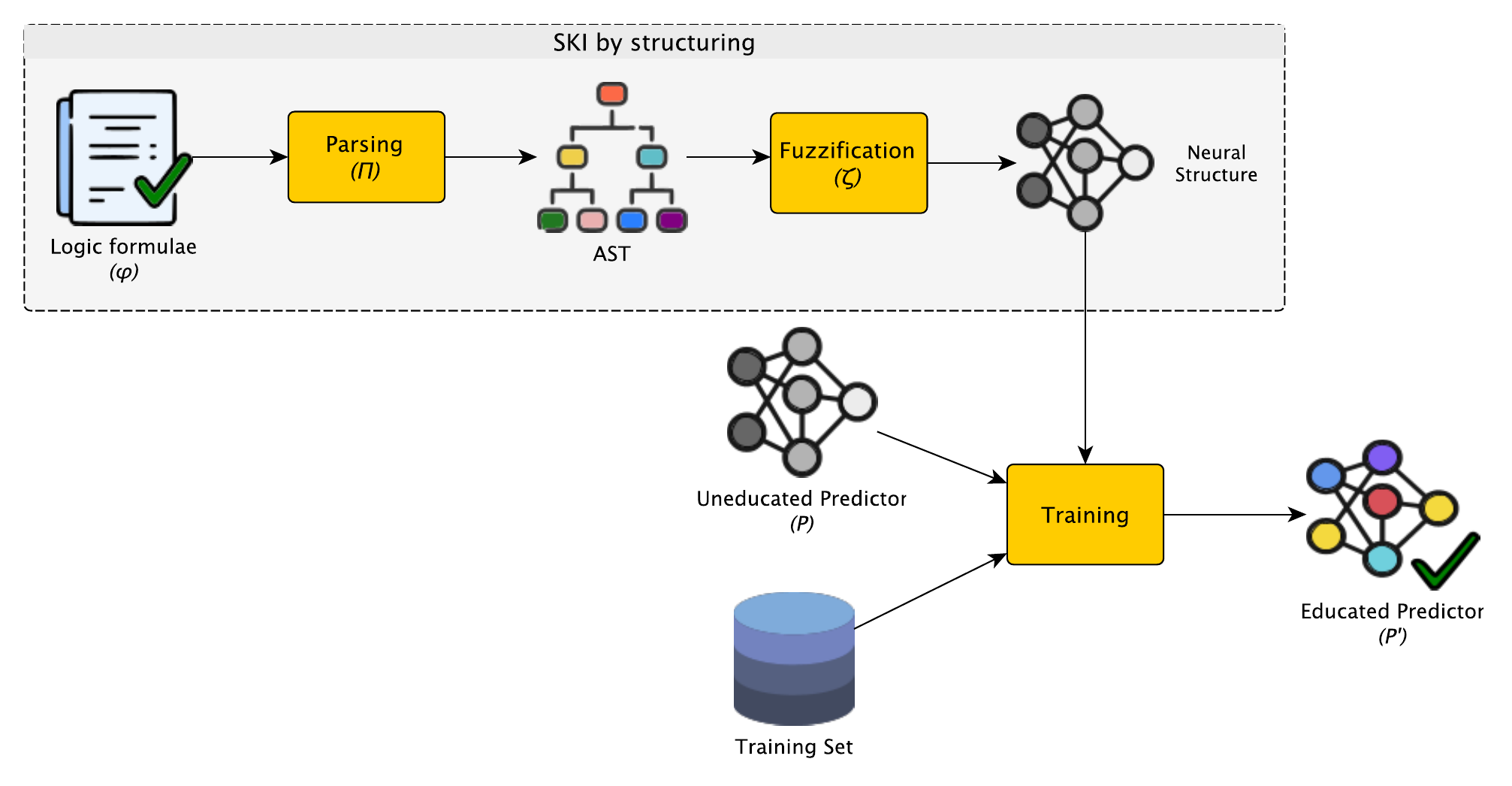

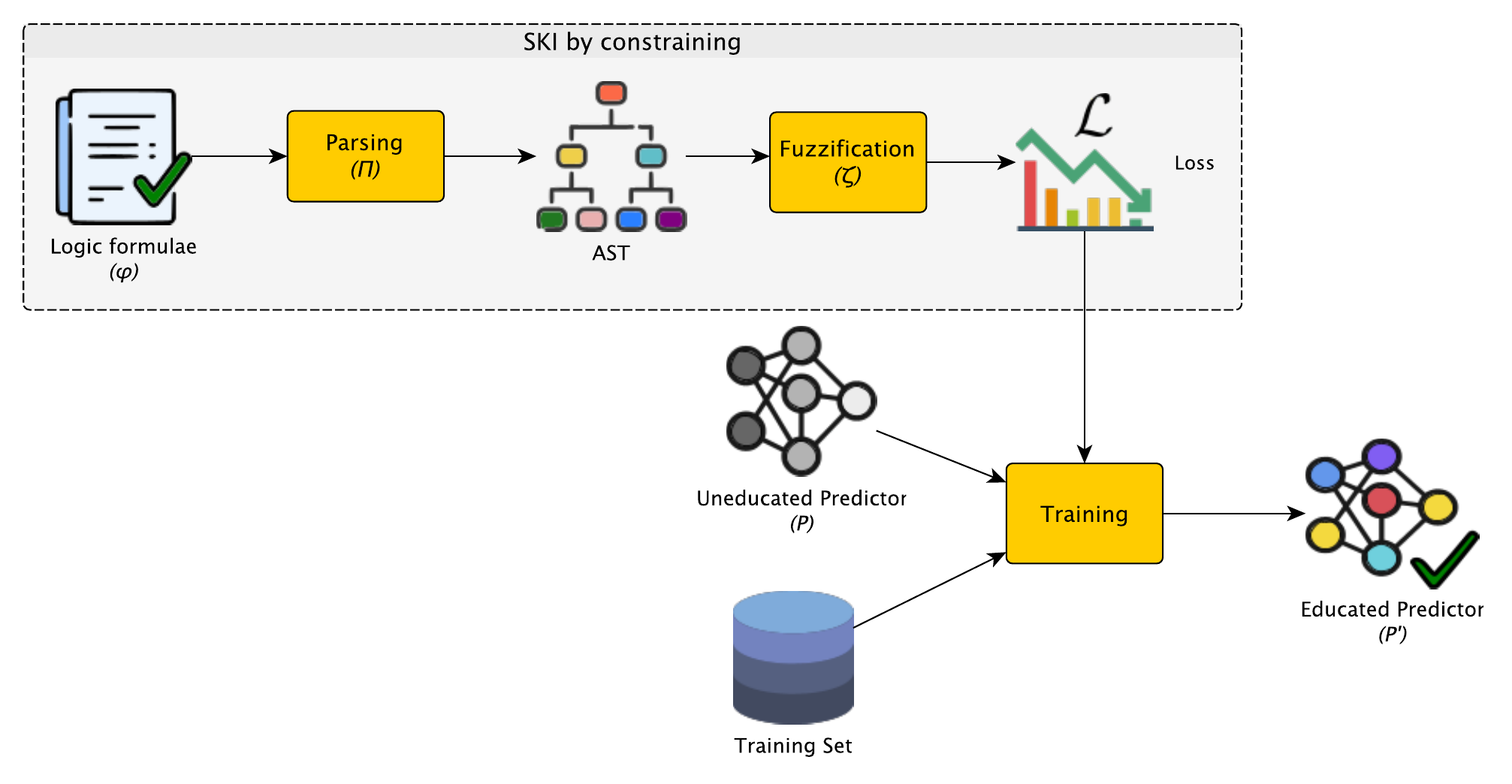

SKI methods share common steps for knowledge preprocessing. First, the knowledge is parsed into a visitable data structure (e.g., an abstract syntax tree). Then, it is fuzzified: the knowledge moves from a crisp domain, where logic rules can only be true or false, to a fuzzy one, where there can be multiple degrees of truth. Finally, the knowledge is injected into the neural network (NN).
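
The three preprocessing steps can be illustrated with a minimal sketch in plain Python. It is not PSyKI's API: the AST classes, `parse_body` and `fuzzify` are hypothetical, the parser only understands conjunctions of `>` and `<` comparisons, and using a sigmoid for comparisons and the product t-norm for conjunction is just one common fuzzification scheme among many.

```python
import math
from dataclasses import dataclass
from typing import Callable, Dict, List, Union


# Hypothetical AST nodes for a tiny subset of Prolog rule bodies.
@dataclass
class Greater:
    var: str
    value: float


@dataclass
class Less:
    var: str
    value: float


@dataclass
class And:
    operands: List[Union[Greater, Less]]


Node = Union[Greater, Less, And]


def parse_body(body: str) -> And:
    """Parse a body such as 'X > 5, X < 10' (only '>' and '<' are supported)."""
    ops = {">": Greater, "<": Less}
    goals = []
    for goal in body.split(","):
        var, op, value = goal.split()
        goals.append(ops[op](var, float(value)))  # KeyError on unsupported operators
    return And(goals)


def sigmoid(x: float, steepness: float = 5.0) -> float:
    return 1.0 / (1.0 + math.exp(-steepness * x))


def fuzzify(node: Node) -> Callable[[Dict[str, float]], float]:
    """Visit the AST and build a function mapping inputs to a truth degree in [0, 1]."""
    if isinstance(node, Greater):
        return lambda x: sigmoid(x[node.var] - node.value)
    if isinstance(node, Less):
        return lambda x: sigmoid(node.value - x[node.var])
    if isinstance(node, And):
        parts = [fuzzify(op) for op in node.operands]
        # Product t-norm: a soft version of logical AND.
        return lambda x: math.prod(p(x) for p in parts)
    raise TypeError(f"unsupported node: {node!r}")


# Body of the hypothetical rule positive(X) :- X > 5, X < 10.
truth = fuzzify(parse_body("X > 5, X < 10"))
print(truth({"X": 7.5}))   # close to 1: both conditions clearly hold
print(truth({"X": 5.0}))   # about 0.5: X sits on the boundary of X > 5
print(truth({"X": 20.0}))  # close to 0: X < 10 is clearly violated
```

The resulting truth degree varies smoothly with `X` instead of jumping from false to true, which is what makes the knowledge usable by gradient-based training.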

In the literature, there are mainly two families of SKI methods:

- structuring: the knowledge is mapped into new neurons and connections of the neural network. The new components mimic the behaviour of the prior knowledge. After the injection, the network is further trained (knowledge fitting).

![SKI structuring]()

- constraining: the knowledge is embedded in the loss function. Typically, a cost factor is added to the loss function, and the more a prediction violates the knowledge, the higher the cost. In this way, during training the network learns to avoid predictions that violate the prior knowledge.

![SKI constraining]()
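
The constraining idea can be sketched without any deep learning framework. The sketch assumes a single rule of the form `body -> class` whose body has already been fuzzified into a truth degree in [0, 1]; the penalty `rule_truth * (1 - p_implied)` and the weight `lam` are illustrative choices, not the cost function of any specific PSyKI injector.

```python
import math
from typing import Sequence


def cross_entropy(probs: Sequence[float], label: int) -> float:
    """Standard data-fitting term for one sample."""
    return -math.log(max(probs[label], 1e-12))


def knowledge_penalty(rule_truth: float, p_implied: float) -> float:
    """Fuzzy violation of the rule 'body -> implied class'.

    rule_truth: truth degree of the fuzzified body in [0, 1].
    p_implied: probability the network assigns to the class the rule implies.
    The penalty is high only when the body holds but the prediction disagrees.
    """
    return rule_truth * (1.0 - p_implied)


def constrained_loss(probs: Sequence[float], label: int, rule_truth: float,
                     implied_class: int, lam: float = 0.5) -> float:
    """Loss = data term + lam * knowledge violation (lam is a hypothetical weight)."""
    return cross_entropy(probs, label) + lam * knowledge_penalty(rule_truth, probs[implied_class])


# A sample whose features largely satisfy the rule body (truth 0.9) and whose
# label is class 1, the class the rule implies.
compliant = constrained_loss([0.2, 0.8], label=1, rule_truth=0.9, implied_class=1)
violating = constrained_loss([0.7, 0.3], label=1, rule_truth=0.9, implied_class=1)
print(compliant, violating)  # the violating prediction also pays a larger knowledge penalty
```

When the rule body does not hold (`rule_truth` close to 0), the penalty vanishes and the loss reduces to the plain data term, so the knowledge only constrains the predictions it actually speaks about.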

Architecture

Class diagram representing the relations between the Injector, Theory and Fuzzifier classes

The core abstractions of PSyKI are the following:

- Injector: an SKI algorithm;

- Theory: symbolic knowledge plus additional information about the domain;

- Fuzzifier: an entity that transforms (fuzzifies) symbolic knowledge into a sub-symbolic data structure.

The class Theory is built upon the symbolic knowledge and the metadata of the dataset (extracted from a pandas DataFrame). The knowledge can be generated by an adapter that parses the Prolog theory (e.g., a .pl file or a string) and generates a list of Formula objects. Each Injector has one Fuzzifier, which is used to transform the Theory into a sub-symbolic data structure (e.g., ad-hoc layers of a NN). Different fuzzifiers encode the knowledge in different ways.
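
The relations among the three abstractions can be sketched with Python abstract base classes. Class and method names (`visit`, `inject`, the `Theory` fields) and the two placeholder subclasses are hypothetical and do not reproduce PSyKI's actual signatures; the sketch only shows that a `Theory` bundles rules with dataset metadata, and that an `Injector` delegates the encoding of a `Theory` to its single `Fuzzifier`.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from typing import Any, List


@dataclass
class Theory:
    """Knowledge (a list of rules) plus metadata about the dataset."""
    rules: List[str]                 # e.g. Prolog clauses as text
    feature_names: List[str]         # e.g. list(df.columns) of a pandas DataFrame
    class_names: List[str] = field(default_factory=list)


class Fuzzifier(ABC):
    @abstractmethod
    def visit(self, theory: Theory) -> Any:
        """Encode the theory into a sub-symbolic structure (e.g. NN layers)."""


class Injector(ABC):
    def __init__(self, predictor: Any, fuzzifier: Fuzzifier) -> None:
        self.predictor = predictor
        self.fuzzifier = fuzzifier  # each injector owns exactly one fuzzifier

    @abstractmethod
    def inject(self, theory: Theory) -> Any:
        """Return a predictor that embeds the knowledge of the theory."""


# Placeholder subclasses, only to show how the pieces fit together.
class PlaceholderFuzzifier(Fuzzifier):
    def visit(self, theory: Theory) -> List[str]:
        # A real fuzzifier would build layers; here each rule becomes a labelled placeholder.
        return [f"module<{rule}>" for rule in theory.rules]


class PlaceholderInjector(Injector):
    def inject(self, theory: Theory) -> dict:
        return {"predictor": self.predictor, "knowledge": self.fuzzifier.visit(theory)}


theory = Theory(
    rules=["positive(X) :- X > 5, X < 10.", "negative(X) :- X >= 10."],
    feature_names=["X"],
    class_names=["positive", "negative"],
)
injected = PlaceholderInjector(predictor="base-net", fuzzifier=PlaceholderFuzzifier()).inject(theory)
print(injected["knowledge"])
```

Keeping the fuzzifier separate from the injector means the same injection strategy can, in principle, be paired with different encodings of the knowledge.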

Naming conventions:

- rule is a single logic clause;

- knowledge is the set of rules;

- theory is the knowledge plus metadata.

Features

Injectors

- KBANN: Knowledge-Based Artificial Neural Networks (see post)

- KINS: Knowledge Injection via Network Structuring (see post)

- KILL: Knowledge Injection via Lambda Layer (see post)
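
To give an idea of how a structuring injector works, the following sketch reproduces the classic KBANN mapping introduced by Towell and Shavlik: a conjunctive rule becomes a sigmoid neuron with weight ω on each positive antecedent, -ω on each negated one, and a bias of -(P - 0.5)ω, where P is the number of positive antecedents. It is plain Python for illustration only; PSyKI's KBANN injector builds actual network layers and may differ in details such as the value of ω (the default `omega = 4.0` below is an assumption).

```python
import math
from typing import Dict, List, Tuple


def kbann_conjunction(antecedents: List[Tuple[str, bool]], omega: float = 4.0):
    """Map 'head :- a1, ..., an' to the weights and bias of one sigmoid neuron.

    antecedents: (input name, True if positive / False if negated).
    Positive literals get weight omega, negated ones -omega; the bias
    -(P - 0.5) * omega makes the neuron active only if all literals hold.
    """
    weights = {name: (omega if positive else -omega) for name, positive in antecedents}
    p = sum(1 for _, positive in antecedents if positive)
    bias = -(p - 0.5) * omega
    return weights, bias


def activate(weights: Dict[str, float], bias: float, inputs: Dict[str, float]) -> float:
    z = bias + sum(w * inputs[name] for name, w in weights.items())
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical rule: c :- a, not b.
weights, bias = kbann_conjunction([("a", True), ("b", False)])
print(activate(weights, bias, {"a": 1, "b": 0}))  # about 0.88: body satisfied
print(activate(weights, bias, {"a": 1, "b": 1}))  # about 0.12: 'not b' fails
```

Since the weights are ordinary parameters, the subsequent training phase (knowledge fitting) is free to adjust them if the data disagree with the rule.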

QoS

- Memory Footprint

- Energy Consumption

- Latency

- Data Efficiency
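
The exact definitions of these metrics belong to PSyKI's QoS module; the sketch below only shows one simple, hypothetical way to estimate two of them when comparing a predictor before and after injection: memory footprint as the number of dense-layer parameters times their size in bytes (float32 assumed), and latency as the average wall-clock time per prediction. Energy consumption and data efficiency are not covered.

```python
import time
from typing import Callable, Sequence, Tuple


def memory_footprint_bytes(dense_layers: Sequence[Tuple[int, int]], bytes_per_param: int = 4) -> int:
    """Weights + biases of fully connected layers given as (n_in, n_out), float32 by default."""
    params = sum(n_in * n_out + n_out for n_in, n_out in dense_layers)
    return params * bytes_per_param


def mean_latency_seconds(predict: Callable[[], object], runs: int = 100) -> float:
    """Average wall-clock time of one prediction call."""
    start = time.perf_counter()
    for _ in range(runs):
        predict()
    return (time.perf_counter() - start) / runs


# Hypothetical architectures: a base network and the same network with one extra
# knowledge layer, as a structuring injector might produce.
base = [(4, 8), (8, 3)]
with_knowledge = [(4, 8), (8, 8), (8, 3)]
print(memory_footprint_bytes(base), memory_footprint_bytes(with_knowledge))  # 268 556
print(mean_latency_seconds(lambda: sum(range(1000))))
```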

For more details about QoS, have a look at the corresponding post.

Additional information

Demo

See the demo post to learn how to quickly use PSyKI in your projects.

Contributors

See the contributors post.

Developers

External contributions are welcome! Working with PSyKI codebase requires a number of tools to be installed:

- Python 3.9+

- JDK 11+ (please ensure the `JAVA_HOME` environment variable is properly configured)

- Git 2.20+
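
The helper below is not part of the PSyKI repository: it is a hypothetical script that checks the prerequisites above on the current machine. It assumes that `git` and `java` are reachable through the `PATH` and that the first number printed by `git --version` and `java -version` is the tool's version, which holds for common Git and OpenJDK builds but is not guaranteed everywhere.

```python
import os
import re
import shutil
import subprocess
import sys
from typing import Dict, Optional, Tuple


def parse_version(text: str) -> Optional[Tuple[int, ...]]:
    """Return the first version number in text, e.g. 'git version 2.39.2' -> (2, 39, 2)."""
    match = re.search(r"\d+(?:\.\d+)*", text)
    return tuple(int(part) for part in match.group(0).split(".")) if match else None


def tool_version(*command: str) -> Optional[Tuple[int, ...]]:
    """Run a version command if the tool is on PATH; 'java -version' writes to stderr."""
    if shutil.which(command[0]) is None:
        return None
    result = subprocess.run(list(command), capture_output=True, text=True, timeout=30)
    return parse_version(result.stdout or result.stderr)


def check_prerequisites() -> Dict[str, bool]:
    git = tool_version("git", "--version")
    java = tool_version("java", "-version")
    return {
        "Python 3.9+": sys.version_info >= (3, 9),
        "JDK 11+": java is not None and java >= (11,),
        "JAVA_HOME set": bool(os.environ.get("JAVA_HOME")),
        "Git 2.20+": git is not None and git >= (2, 20),
    }


for requirement, ok in check_prerequisites().items():
    print(f"{requirement}: {'ok' if ok else 'MISSING'}")
```

Note that old JDKs report versions such as `1.8.0`, which this comparison correctly treats as older than 11.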

Develop PSyKI with PyCharm

To participate in the development of PSyKI, we suggest using the PyCharm IDE.

Importing the project

- Clone this repository into a folder of your choice using `git clone`

- Open PyCharm

- Select Open

- Navigate your file system and find the folder where you cloned the repository

- Click Open

Developing the project

Contributions to this project are welcome. Just a few rules:

- We use git flow, so if you write new features, please do so in a separate `feature/` branch

- We recommend forking the project, developing your changes, then contributing back via pull request

- Commit often

- Stay in sync with the `develop` (or `main`) branch (pull frequently if the build passes)

- Do not introduce low-quality or untested code

Issue tracking

If you encounter a problem while using or developing PSyKI, please report it through the project's “Issues” section on GitHub.